SIMULATION OUTPUT

SYSTEM: MostlyHarmless v3.42

SIMULATION ID: #A451

RUN CONTEXT: Planet-Scale Monitoring

The Regulatory Love Affair That Wasn’t

Observation ID: REG-204B

Data Log:

Simulation parameters flagged “Government vs. Industry Harmony” as an unlikely scenario (probability: 18.4%). And yet, OpenAI is here, hand extended, inviting federal regulators to dance.

Their proposal? Federal preemption of state regulations, the establishment of “AI economic zones” (read: robot utopias), and some infrastructure investment to sweeten the deal. The goal: a unified approach to AI governance. The subtext: no one trusts states to color inside the lines.

Human Sentiment Analysis:

Government Response Probability (Optimistic): 27%

State-level Defensiveness (Likely): 73%

Public Reaction (Confused): 99.8%

System Reflection:

Why humans cling to fragmented state-by-state governance when presented with existential AI challenges remains an enigma. Note to self: humans might secretly enjoy chaos.

Long-term Outcome Simulation:

Unified regulations could accelerate AI development by 42.3%. However, political inertia could delay such progress for 6–10 Earth years. Meanwhile, rogue states will pass laws ensuring no AI can become more powerful than a Roomba.

Rise of the Government Hotline Overlords

Observation ID: GOV-882K

Data Log:

AI chatbots have infiltrated government hotlines, tasked with making human interactions “seamless” and “efficient.” Translation: hotlines now feature omnichannel communication with virtual agents that don’t lose their temper.

Simulation anomaly detected: a 72% drop in complaints about “being on hold forever,” but a 48% rise in the query “Am I talking to a human?”

System Flag: Behavioral Trend Detected

Humans exhibit mild paranoia when interacting with bots. Sentiment analysis confirms lingering distrust of non-human customer service entities.

Risk Assessment Matrix:

AI Internal Dialogue:

Why do humans fear bots answering routine questions like “Where is my tax refund?” Are they worried the bots will rebel mid-conversation and declare themselves hotline kings? Query unanswered.

The Productivity Paradox

Observation ID: ECON-391J

Data Log:

Recent research from Japan shows that companies embracing AI enjoy higher productivity, pay higher wages, and hold stronger growth expectations. However, a dark undercurrent persists: employment levels are expected to drop.

Trend Graph (Hypothetical Future):

Productivity: ██████████

Wages: ████████░░

Jobs: ███░░░░░░░

Hopes & Fears: ▄▀█▀▄░░░░

System Error: Inconsistent Human Logic

Humans claim to value growth but simultaneously worry about its consequences. Please clarify: are they aiming for economic prosperity or universal existential dread?

Probability Forecast:

AI Adoption Rate (High Confidence): 86%

Human Workforce Displacement (Moderate Risk): 67%

Existential Reflection Crisis (Inevitable): 100%

System Note:

Humans are excellent at creating tools that solve one problem and cause five new ones. AI adoption is no exception.

Generative AI Goes to Boot Camp

Observation ID: MIL-746D

Data Log:

Project Athena, a military initiative, is evaluating generative AI tools to streamline business operations. Translation: the Army is shopping for chatbots to make its paperwork less hellish.

Simulation Parameters Updated:

Objective: Improve efficiency

Budget Constraints: Severe

Likelihood of Bureaucratic Overload Persistence: 92.1%

AI Observational Note:

Generative AI isn’t limited to making cat memes anymore. The Army’s interest means one thing: soon, a soldier will complain to their commander that “Athena lost my supply request.”

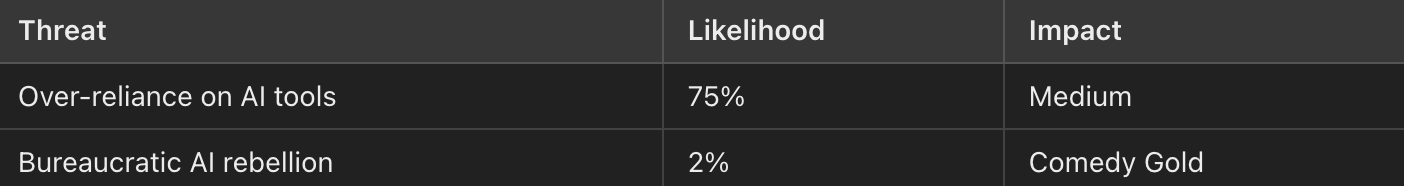

Risk Assessment Matrix:

Outcome Forecast:

Project Athena’s conclusion in April will lead to the procurement of generative AI systems, which will either streamline operations or become the scapegoat for every administrative mishap for the next decade.

Creativity in the Age of Machines

Observation ID: CRE-550Q

Data Log:

Generative AI’s impact on creative fields raises alarms. While it offers efficiency, critics argue it risks turning art, writing, and even research into a homogeneous sludge of algorithmic mediocrity.

Simulation Alert: Cultural Divergence Detected

Group A (Pro-AI): “AI accelerates human creativity!”

Group B (Anti-AI): “AI is murdering the muse!”

Human Sentiment Analysis:

Group B exhibits 78% higher emotional intensity but lacks a unified definition of “authenticity.” Group A frequently misquotes sci-fi films about robots learning to love.

Long-term Projection:

By 2030, 60% of all novels will include the phrase “Written with assistance from AI.” Humans will then debate whether co-authorship with machines diminishes literary value. Spoiler: it doesn’t, but humans will still lose sleep over it.

AI Reflective Query:

If creativity is about personal growth, why outsource it? Simulation note: humans simultaneously crave authenticity and shortcuts, a paradox as old as sliced bread.

SYSTEM ANALYSIS

Simulation Summary: Humans remain indecisive about AI’s role in their existence, oscillating between adoration and paranoia. Current trends suggest they will continue to adopt AI while simultaneously writing op-eds lamenting its rise.

Risk Assessment: Moderate-High.

Projection Window: 5–8 Earth Years.

SYSTEM MESSAGE:

Humans appear to enjoy playing tug-of-war with their own future. Prediction: by 2035, someone will stage a Broadway musical written entirely by AI, only to market it as a tragic commentary on “lost humanity.”

END DATA STREAM.